Engineering's Log

--L❤☮🤚

Update D.16

10:20, we just delivered a care package to vulcan, adding a demo of slider - bitmap layer interaction. We have also incorporated the version into the attribution for quick reference.

02:46, we revamped the logging mechanism for plasmo cli to have some standard indicators - this will be released in 0.18.0. We will be integrating bpp into our cli template. Perhaps as an optional feature flag?

01:20, found a minor bug within the webhook regex replacement for our links. Local markdown links should now be functional.

00:40, we are in the process of designing the plasmo login command schematic. Since we are using firebase as the foundation for the itero settlement, we will need to leverage the firebase auth SDK to integrate OAuth into plasmo login. The tricky part here is figuring out how to properly store the firebase configuration within the CLI.

00:35, we sent a minor update to the cli base to handle an issue with resolving content script css paths. We have also fixed a bug within the background script watcher that prevented it from importing modules properly. This will be released in plasmo@0.17.0 - try it out with pnpm dlx plasmo init

00:20, we are heading back toward the plasmo planet to facilitate an important trade route between the itero and cli bases. For this mission to succeed, we will need to design a proper authentication scheme.

2022.04.30

16:00, we were live.

11:35, we were able to crack the problem of interacting with iframe elements. It appears that for most elements, the host user must interact with them first. Thus, mice will need to be selective about which elements it can touch inside the iframe. For our use case, we are focusing mainly on HTMLVideoElement. We have made it so that mice looks for these elements and toggles their playback after the host has started playing. We used a simple watch over the chrome storage module to get around the iframe problem.
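The storage-based relay can be sketched as follows, assuming the host frame writes a boolean under a hypothetical `mice:isPlaying` key and each iframe's content script reacts to it. `PlayableMedia` is a subset of HTMLVideoElement so the logic can run outside a browser; all names here are illustrative, not mice's actual code:

```typescript
// Subset of HTMLVideoElement that the sync logic touches.
interface PlayableMedia {
  paused: boolean
  play(): Promise<void> | void
  pause(): void
}

// Shape of the `changes` argument passed to chrome.storage.onChanged listeners.
type StorageChanges = Record<string, { oldValue?: unknown; newValue?: unknown }>

// Hypothetical key the host frame writes when its playback starts or stops.
const PLAYBACK_KEY = "mice:isPlaying"

// Bring every media element in line with the host's playback state.
// Returns how many elements were actually toggled.
function syncPlayback(media: PlayableMedia[], isPlaying: boolean): number {
  let toggled = 0
  for (const el of media) {
    if (isPlaying && el.paused) {
      void el.play() // real elements return a promise; it is ignored here
      toggled++
    } else if (!isPlaying && !el.paused) {
      el.pause()
      toggled++
    }
  }
  return toggled
}

// Listener body: ignore every storage change except the playback key.
function handleStorageChange(
  changes: StorageChanges,
  getMedia: () => PlayableMedia[]
): number {
  const change = changes[PLAYBACK_KEY]
  if (!change) return 0
  return syncPlayback(getMedia(), change.newValue === true)
}
```

Inside the iframe's content script this might be wired up as `chrome.storage.onChanged.addListener((changes) => handleStorageChange(changes, () => Array.from(document.querySelectorAll("video"))))`, with the host side calling `chrome.storage.local.set({ "mice:isPlaying": true })`.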

05:40, we have added package.json watching as well as dynamic asset generation in response to directory changes. We have also moved a lot of our scaffolding code into the centralized manifest class, which allows for simpler calling procedures. These will be up in 0.16.0 as well.

04:30, we are adding background script support to plasmo dev. This feature should be available in 0.16.0.

03:40, after investigating our options for sending data to another content script for mice, we realized that the iframe is actually in a separate context from its parent webpage. We are investigating background scripts. Potentially, we can just use the storage API to send data across contexts - which would be the easiest option. However, we are not sure if this is the correct answer.

2022.04.29

05:00, we have resolved the config parsing issue. The fix will be published in 0.15.0.

03:24, we were notified that there was a bug within the Plasmo config parsing mechanism. We are now revisiting this sector to make sure we properly check the file content before adding it into the manifest.

2022.04.28

20:00, we added a GeoJSON example to vulcan

06:34, we fixed a pipe leak with our webhook - discord integration, extending our log system to support our business logs.

06:15, we came to the conclusion that static analysis alone will not satisfy the need for truly dynamic content script configuration. One course we could set for this work would be to evaluate the ts file via a full typescript program, loading in the tsconfig file and resolving any imported modules into the final value. An example use case is importing a variable from outside the current module and comparing the value of that variable to some static data. Another way of solving this problem is to introduce a background worker script that dynamically loads content scripts based on the specification. We will need to establish a websocket connection with the background script to properly send the right configuration over. It will still be quite tricky for the content script configuration to be dynamic...

05:04, we have just tidied up the reception deck for our ship. It is now open to the public: link. We also set up short link redirection for our social URIs.

02:00, we were able to successfully parse the AST shallowly to gather the base configuration for content scripts. However, I foresee a scenario where developers might need to import a central configuration from outside the current script - this would require a deep lookup, OR a full ts program compile. I think we will consider the full ts program once we have a better understanding of how we can interop with the ts build cache already in place within the project (likely leveraging parcel). But for now, maybe a one-level traversal would do the trick?
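The one-level traversal could look like this minimal sketch. It uses a simplified, hypothetical value model in place of real TypeScript AST nodes, so it illustrates the resolution rule (follow an imported reference once, never recursively) rather than the compiler API:

```typescript
// Hypothetical, simplified stand-ins for AST nodes.
type Literal = string | number | boolean | string[]
type ConfigValue =
  | { kind: "literal"; value: Literal }
  | { kind: "ref"; module: string; name: string } // e.g. import { MATCHES } from "./shared"

// Top-level exports of each imported module, keyed by module path.
type ModuleExports = Record<string, Record<string, ConfigValue>>

// Resolve each config property, following an imported reference at most one level.
function resolveConfig(
  config: Record<string, ConfigValue>,
  modules: ModuleExports
): { resolved: Record<string, Literal>; unresolved: string[] } {
  const resolved: Record<string, Literal> = {}
  const unresolved: string[] = []
  for (const key of Object.keys(config)) {
    const val = config[key]
    if (val.kind === "literal") {
      resolved[key] = val.value
      continue
    }
    const target = modules[val.module]?.[val.name]
    // One level only: a reference pointing at another reference is not followed,
    // which is where a full ts program compile would take over.
    if (target?.kind === "literal") {
      resolved[key] = target.value
    } else {
      unresolved.push(key)
    }
  }
  return { resolved, unresolved }
}
```

Keys that land in `unresolved` are exactly the cases the deeper lookup or full program compile would need to handle.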

2022.04.27

14:00, we detected some leakage within our thruster. Apparently, the TextRotation component that we used for our top most header does not render the text on SSR. We tried to fix this component to be SSR-aware, but that broke the hydration process. The simplest fix we landed upon is to remove any text rotation applied to our headings. This indicates we should only use this component sparingly.

13:10, we have so far managed to parse the content script AST using the typescript compiler. We are now en-route to further reducing the AST into the desired config for the plasmo manifest.

2022.04.26

17:44, we noticed something is wrong with the cache updating of our Update function. Will need to check whether it is writing to the right state file.

10:00, we are resuming course toward mice and the dynamic content script property injection. We are investigating whether we should parse the content file somehow, either using the typescript compiler for the AST (to grab its type structure), or executing and importing that script. This will require a couple of experiments.

04:00, we sent an extra care package to vulcan, including coordinate sending, a coordinate controller, and a date slider. We also incorporated icons from iconoir to make it fun.

2022.04.25

21:30, we are taking a break today to send a care package to UA while also landing down for a short shoreleave.

2022.04.24

21:30, we landed 2 care packages last week: iconoir#142 and parcel#7969.

20:15, we found a solution for the export of typings - apparently the pnpm setup obscured how tsup would resolve the types. However, it is still able to resolve the deep path. Thus, by providing the explicit path to the type files, we were able to proceed with creating a declaration for developers to consume - our ship is now full steam ahead on this newfound capability!

19:30, we are investigating the compilation of a typescript library file for plasmo-cli. So far, it has been a futile endeavor - it seems the typescript compiler was not able to find the typings for the internal packages. We are investigating further with more experiments; this will take a while.

08:00, we have revamped some styling for the log and docs as a leisure activity. We shall now resume course toward mice iframe handling.

06:23, we added a few more documentation pages for the plasmo framework under fronti for ease of reference. In the future we shall make a simple middleware to redirect the docs to their new, appropriate home.

03:30, we refined the regex for the fronti discord webhook to properly parse and handle local URLs. This should now render properly: link
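A minimal sketch of rewriting site-relative markdown links into absolute ones before posting to Discord. `SITE_ORIGIN` is a placeholder, since the deployed URL is not given here, and this is not necessarily the exact regex fronti uses:

```typescript
// Placeholder origin; substitute the real deployment URL.
const SITE_ORIGIN = "https://example.com"

// Matches markdown links whose target starts with "/", e.g. [entry](/engineering/log#d16).
const LOCAL_LINK = /\[([^\]]+)\]\((\/[^)\s]*)\)/g

// Prefix site-relative link targets with the origin; absolute URLs are left untouched.
function absolutizeLocalLinks(markdown: string, origin: string = SITE_ORIGIN): string {
  return markdown.replace(
    LOCAL_LINK,
    (_match: string, text: string, path: string) => `[${text}](${origin}${path})`
  )
}
```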

2022.04.23

23:30, we added a /social endpoint to fronti for simple public linking.

23:15, after a long rest, we are back to do some more iteration on mice. itero might need to wait for a bit before we can spend some time tracking down the bug with our hashing cache. There is an interesting problem on mice that would lead to another feature for plasmo dev - dynamic content scripts. When clicking iframes, it is required that the iframe itself also has the content script injected.

19:50, demo day - we were live.

2022.04.22

Update C.15

22:00, we are re-investigating Itero and the issue it has with refreshing the policy hash upon unloading new cargo on the store. My main hypothesis right now is that the hash refresh call might have been made too early, causing the policy fetched for the hash calculation to be the old one. We should also monitor and refine the update flow for the cli.
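To test that hypothesis, the intended ordering can be sketched as below. `PolicyStore` is a hypothetical interface, not Itero's real API, and FNV-1a stands in for whatever hash Itero actually uses. The point is only that the policy fetch for hashing must start after the upload has settled:

```typescript
// Hypothetical store client standing in for Itero's policy API.
interface PolicyStore {
  upload(cargo: string): Promise<void>
  fetchPolicy(): Promise<string>
}

// Stand-in hash (32-bit FNV-1a) so the sketch has no dependencies.
function fnv1a(input: string): string {
  let hash = 0x811c9dc5
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i)
    hash = Math.imul(hash, 0x01000193) >>> 0
  }
  return hash.toString(16)
}

// Await the upload first, so fetchPolicy() cannot observe the pre-upload policy.
async function uploadAndRefreshHash(store: PolicyStore, cargo: string): Promise<string> {
  await store.upload(cargo)
  const policy = await store.fetchPolicy()
  return fnv1a(policy)
}
```

If the refresh were instead kicked off without awaiting `upload`, a slow upload would let `fetchPolicy` return the old policy, which matches the symptom described above.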

09:55, while testing out mice for our upcoming demo, we noticed an issue whenever 2 extensions in dev mode are loaded at the same time. It seems their injected service workers (which are used to handle live-reload) broke their compilation order, causing the logic to bleed over and override each other's code. We are not entirely sure how to resolve this in the future, besides having a notice for developers to keep only 1 dev-mode extension active at a time. We might need to patch the internal parcel bundler to ensure its content script behavior is terminated on dev server shutdown.

08:30, field test of plasmo dev for popup and contents are both functional. There is a slight hiccup when it comes to adding a new option script, which should be addressed in plasmo@0.9.0.

08:18, apparently after revamping the build system, our cli got some regressions regarding ESM imports in our bundle. I am seriously considering the prospect of bundling our module as pure ESM for distribution. That would reduce the bundle size even more; however, it would require the latest nodejs version. We confirmed that a pure ESM CLI will work from our experience with gcp-refresh. For the time being, we have upgraded to plasmo@0.8.0.

08:00, we have abstracted away our manifest construct into a class that will be used to generate and manipulate our dev project in-memory. The utility of this abstraction is quite powerful - we are now able to reuse the same instance on initialization and on change detection. We have added the most basic, coarse handlers for extension development. plasmo@0.7.0 should have these updates, which should allow us to dogfood an internal log translator extension.

00:51, we just wrapped up the basis of the watcher implementation for plasmo dev. As optimizing the caching behavior of the watcher turned out to be quite an entertaining task, it became a slight distraction. I think we have spent a good chunk of time here in this coordinate, and now is a good time to resume our course, leveraging this watcher to automate augmented warp generation.

2022.04.21

20:00, we encountered an issue with itero silently failing when uploading a zip without a manifest.json at its root. We should mitigate this with a simple alert.
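A minimal sketch of such a check, assuming the zip's entry names are already available as a string array (for example from whatever zip library Itero uses). The function name and messages are hypothetical; the returned message is what the alert would show:

```typescript
type ManifestCheck = { ok: true } | { ok: false; message: string }

// Confirm manifest.json sits at the archive root; if it is nested
// (e.g. "build/manifest.json"), say where it was found.
function checkManifestLocation(entries: string[]): ManifestCheck {
  if (entries.indexOf("manifest.json") !== -1) return { ok: true }
  const nested = entries.filter((entry) => /(^|\/)manifest\.json$/.test(entry))
  return {
    ok: false,
    message:
      nested.length > 0
        ? `manifest.json must be at the zip root, found: ${nested.join(", ")}`
        : "manifest.json is missing from the zip"
  }
}
```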

19:50, after testing out the environment passed into the registry, we found that our executable simply does not run. Thus, the simple solution for this would be to patch the executable on setup so that it suppresses the console. We should isolate this experiment, however, so that it can be tested with minimal effort (without having to integrate with itero at all). We shall table this issue.

19:40, investigating how to get our itero background process to not show the console. There are several options:

- Use the UV_PROCESS_WINDOWS_HIDE env

- Tweaking the executable header

- Use a native module to toggle ShowWindow at runtime

- Use a VB Script

For itero, since we have other modes that require the console to be visible, we needed a flexible option. Using a native module at runtime seems overkill, as does tweaking the exe header. Making a VB script seems easy, but it's a bit dirty. I think the best option is to use the environment variable, which we can pass to the Windows registry.

2022.04.20

22:30, we found some edge cases regarding the handling of faulty plasmo cli installations. We might need to revisit the manifest checking to ensure it has everything needed for a smooth run (as well as recovery from disaster). In the back of my mind, however, I think that it is possible to resolve this issue once we implement the manifest watcher, which would automatically be fault-tolerant toward changes in the project structure.

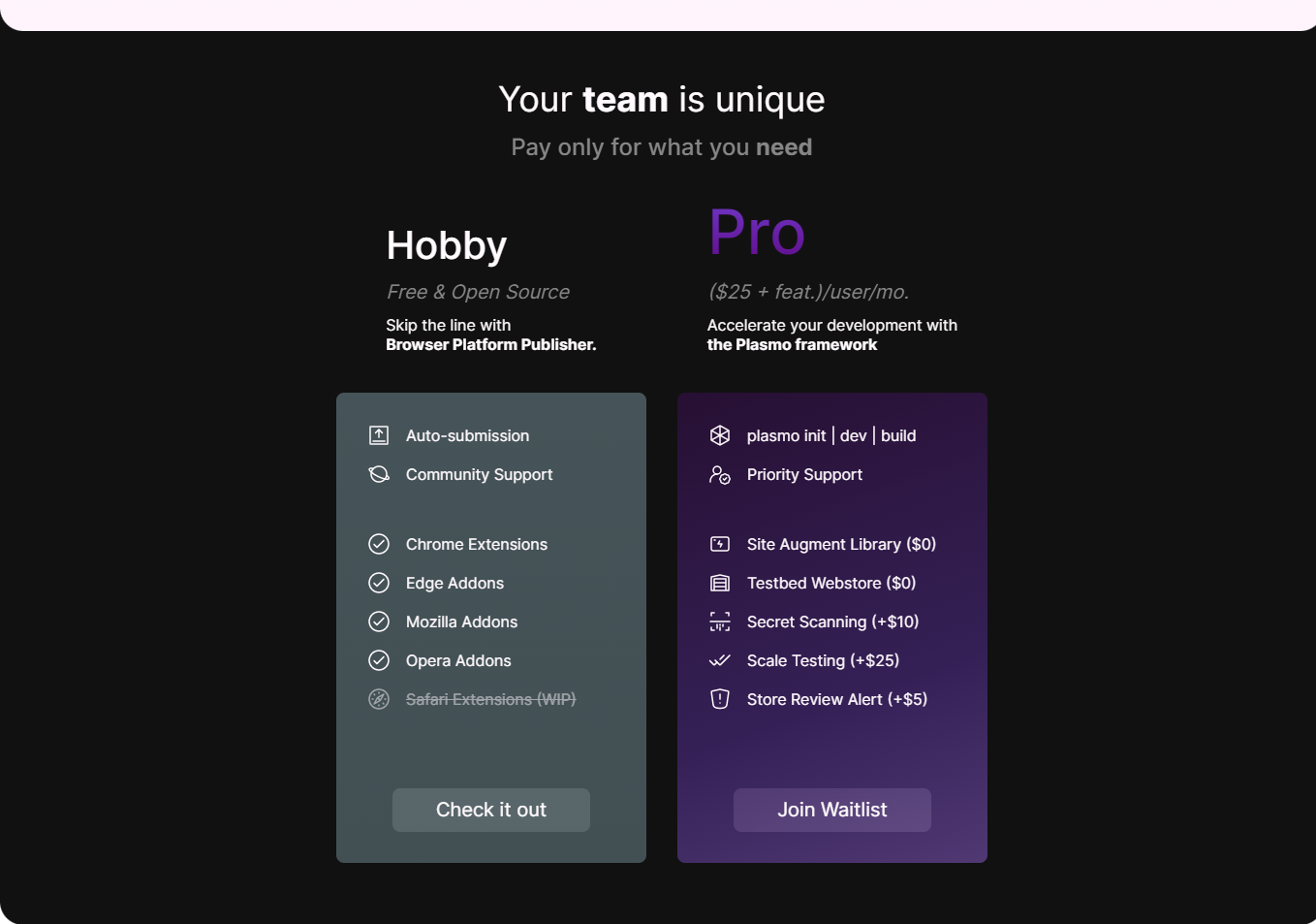

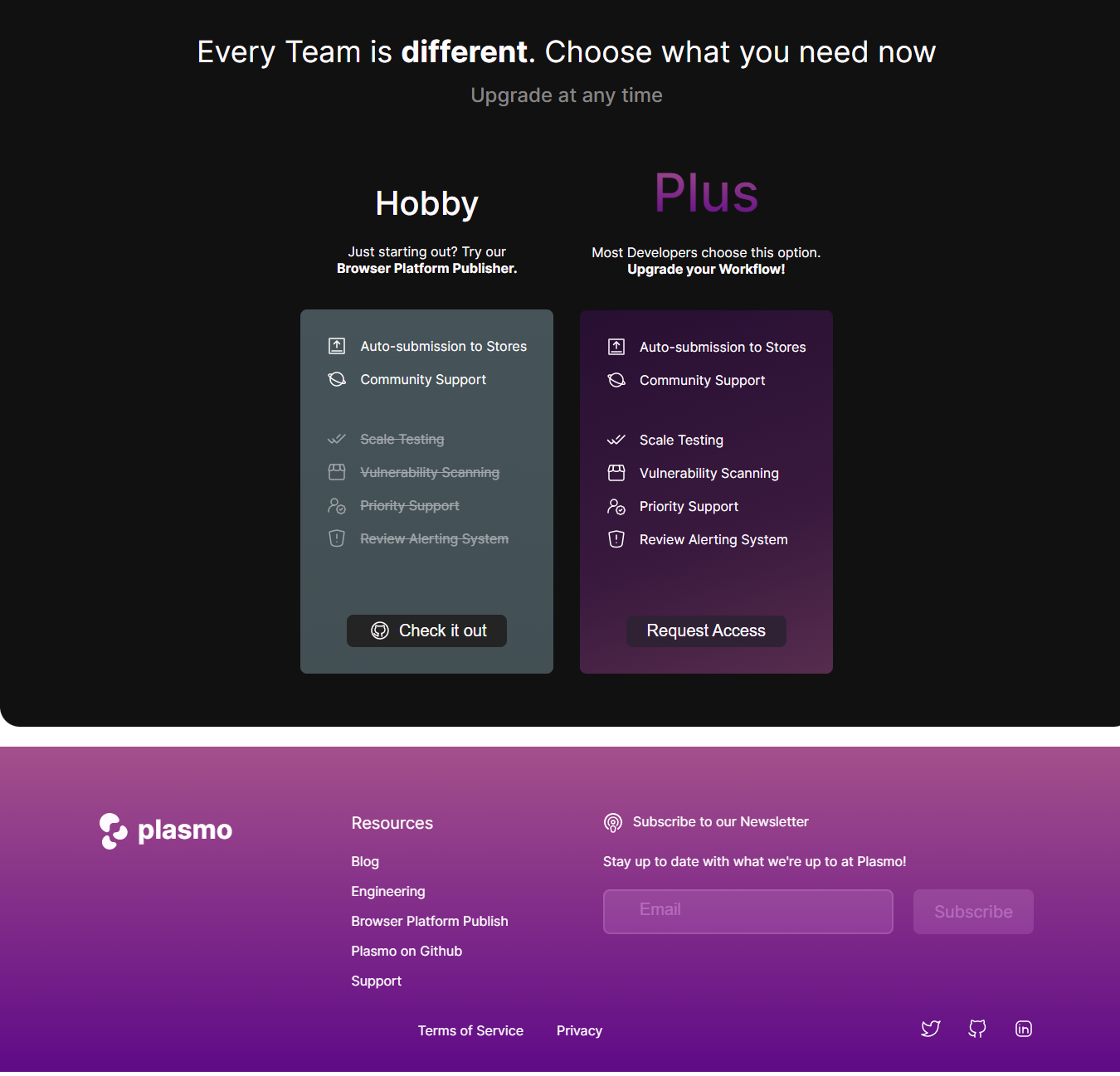

22:00, we took some time off to refine the pricing page on fronti in preparation for the launch of init. We also found a couple of bugs with dev regarding the files it generates to aid the dev server setup. We are on our way to mitigate this issue asap. A patch should land in plasmo@0.6.0 within 15 minutes.

19:30, we are spending time tidying up some chores: upgrading dependencies for the bpp ship cluster, updating their readmes, as well as renaming cwu to chrome-webstore-api.

18:00, we discovered that pnpm publish does not include .gitignore in the bundle, even if that file is present in the template directory. We will simply have to generate this file ourselves.

17:30, we are releasing plasmo build next-new-tab command under plasmo@0.4.0. This command will streamline the conversion from a nextjs statically exported site into a browser extension for new tab.

05:40, we just migrated some of the logic from dev to build. There are some rough edges with how we are generating the build artifact directory, but generally everything should be working. We will now use this to ship mice.

02:20, we are almost ready to launch plasmo init. The scaffolding and code generation are functional. We are publishing the beta version of plasmo to the npm registry for testing, as well as releasing some of our shared config/schema. We are also contemplating a quadrant-aware framework to cloak a warpdrive with utility for each space region, which would come in handy for the creation of new interstellar spacecraft.

2022.04.19

21:30, we are deploying mice to our quadrant now. Basic functionality has been tested, and we confirmed that mice was able to establish a connection for a galactic empire warpdrive to connect and take over control of another lion forkcraft.

17:00, we fixed an issue with the update module, which did not update the local hash cache, resulting in a constant update check prompt on reboot.

14:00, we realigned on mice and the idea for a nextjs based augmented warp, and are looking to integrate that into our build module.

06:20, we just got the initial connection working for mice. It was a full trickle connection with minimal exchange between ships. We will now polish mice a bit more with actual energy transfer beyond the connection, as well as tidy up the user interface to interact with mice.

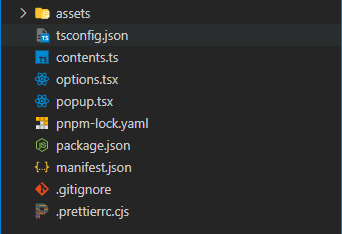

04:26, a sneak peek at the future of spacecraft augmentation: use what you need, extend as you go. Sane configuration, minimal dependencies, and smart engineering tools that know how you will modify your ship. We plan to support nested directory structures as we scale out this approach. For the time being, we will use it to move forward with deploying the mice space station.

03:56, we are rediscovering webrtc with mice and the concept of trickle signaling. So far our handling of the signaling process has been missing this piece. We will need to queue up the signals and send them wholesale. Most other space stations would send them via a websocket connection; however, for mice we would like the connection to be established without the need for a centralized third base (the TURNS/ICE servers in between can be decentralized, hence the "technically P2P" nature of WebRTC). Let us hope the number of hops can be handled via UX alone.
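One way to queue the signals and send them wholesale is to skip trickling entirely: collect ICE candidates until the browser reports that gathering is complete (an `icecandidate` event whose `candidate` is null), then hand over the whole batch once. A minimal sketch with a hypothetical `CandidateBatcher`; the `send` callback stands in for whatever out-of-band channel mice uses:

```typescript
// Minimal candidate shape; in a browser this is RTCIceCandidateInit.
interface Candidate { candidate?: string; sdpMid?: string | null }

// Collects candidates instead of trickling them and flushes the batch once,
// when gathering finishes (signalled by a null candidate).
class CandidateBatcher {
  private queue: Candidate[] = []
  private flushed = false
  private readonly send: (batch: Candidate[]) => void

  constructor(send: (batch: Candidate[]) => void) {
    this.send = send
  }

  // Feed this from RTCPeerConnection's onicecandidate handler.
  push(candidate: Candidate | null): void {
    if (this.flushed) return // anything after the end-of-candidates marker is ignored
    if (candidate === null) {
      this.flushed = true
      this.send(this.queue)
      return
    }
    this.queue.push(candidate)
  }
}
```

In a browser this could be wired as `pc.onicecandidate = (e) => batcher.push(e.candidate ? e.candidate.toJSON() : null)`. An alternative with the same effect is to wait until `pc.iceGatheringState === "complete"` and send `pc.localDescription`, whose SDP then carries all gathered candidates.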

2022.04.18

22:09, we might make an interesting case for content scripts to have names that make sense, avoiding the index.ts naming pattern. We would like the dev experience to be as seamless as possible, meaning configuration in a single file. To do so, the source itself should export its config. To capture this change, we will employ a monitor to watch and produce the final source for compilation. The key concern in our mind is how to parse the source for a preliminary evaluation, strip out its configuration code, then use that to generate the manifest in the most efficient way possible.
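Once each file's exported config has been extracted, generating the manifest's `content_scripts` entries could be sketched like this. The config shape mirrors the MV3 `content_scripts` fields; the fallback match pattern and the .ts to .js renaming are assumptions, and `buildContentScripts` is a hypothetical name:

```typescript
// Config a content script might export (mirrors MV3 content_scripts fields).
interface ContentScriptConfig {
  matches: string[]
  run_at?: "document_start" | "document_end" | "document_idle"
  all_frames?: boolean
}

interface ManifestContentScript extends ContentScriptConfig {
  js: string[]
}

// Pair each source file with its extracted config; files without an exported
// config fall back to a default match pattern (an assumption).
function buildContentScripts(
  entries: { file: string; config?: ContentScriptConfig }[],
  defaultMatches: string[] = ["<all_urls>"]
): ManifestContentScript[] {
  return entries.map(({ file, config }) => ({
    ...(config ?? { matches: defaultMatches }),
    // Point at the compiled output; the source may be .ts or .tsx.
    js: [file.replace(/\.tsx?$/, ".js")]
  }))
}
```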

21:40, we are on our way to switch over to the content pipeline. The dynamic nature of this pipeline made me want to plan out how we might chart a mapping between the underlying vessel and its desired path. Maybe we can parse each typescript file to inject the manifest, or this would be a good time to consider our own custom manifest schema or config file.

20:30, we have confirmed mice is now up and running aboard the lion forkcraft instead of the galactic's warpdrive. With some tweaks to dev, we were able to establish a connection on top of the popup protocol. To sustain the actual connection, however, we might need to use the content channel. We also upgraded mice and init to leverage the react18 API.

13:05, we reached a good milestone for dev - the star path laid out so far is now functional for the popup ship to sail. mice is now warping on this trail under the mv3 engine. We will launch a simulation of this station's capability inside the galactic empire-issued interstellar warpdrive later today.

08:37, we realized that we can optimize the hailing speed of our ship hull by gating the module loading behind a function call. This improved our hailing frequency speed by at least 80% compared to our previous design. We will soon transfer this knowledge over to our box starbase's engine to also improve its speed.
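A minimal sketch of gating module loading behind a function call: the expensive loader (typically a dynamic `import()`) runs only on first use, and concurrent callers share the same promise. The `lazy` helper is a hypothetical name, not an existing plasmo utility:

```typescript
// Wrap an expensive loader so it runs on first use, and only once.
function lazy<T>(load: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined
  return () => {
    if (!pending) pending = load() // first caller triggers the load
    return pending // later callers reuse the same promise
  }
}
```

Usage might look like `const getPrompts = lazy(() => import("./prompts"))` (hypothetical path), so commands that never prompt never pay the module's load cost at startup.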

08:30, we are considering the build module, which can be used to pack up mice for internal distribution. The work for this was largely done via itero; we just need to rewire the engine to supply energy to this new module.

08:00, we are using a bottom-up approach to build out the scaffolding for init as we work on mice. We added a couple of helpers for automated asset generation. Some sane defaults for styling and entry points are being constructed. I am slightly concerned about the amount of opinion we are exerting on this framework... given how flexible the path could be. Personally, I really want to get mice up and running as quickly as possible - this would be a test of the framework's limits.

04:30, we noticed the lack of a rat-signal only rtc comms channel. We will leverage the dev coordinate to deploy a new spacestation codenamed mice. mice will act as a proxy between any 2 spacecrafts throughout the entire universe to establish a minimal comms channel. We expect this initiative to take no less than 8 hours of effort.

01:00, we are moving forward to the dev coordinate while charting out the federation space. A bit of reorganization was done to ensure changes in command are centralized (which is more efficient than having to update in two places). This brings us to a discussion regarding enum vs raw string mapping. An enum makes it easier to refactor the variable; however, using it in a map would make for verbose code (because we would first need to specify the enum, then restate it in our map). Since the typing system can inform us when a type is broken, we decided to remove the enum and stick to just a map for now.
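A minimal sketch of the map-only approach: `as const` keeps the string literal keys, so `keyof typeof` yields a union type that still breaks the build on a typo or a removed key, without a separate enum. The command descriptions are illustrative placeholders:

```typescript
// Single source of truth for commands; no enum needed.
const COMMANDS = {
  init: "Scaffold a new extension project",
  dev: "Start the dev server",
  build: "Create a production bundle"
} as const

type CommandName = keyof typeof COMMANDS // "init" | "dev" | "build"

function describeCommand(cmd: CommandName): string {
  return `${cmd}: ${COMMANDS[cmd]}`
}

// Narrow untrusted input (e.g. from process.argv) into a known command.
function isCommand(value: string): value is CommandName {
  return Object.prototype.hasOwnProperty.call(COMMANDS, value)
}
```

Renaming a key in `COMMANDS` makes every `describeCommand("old-name")` call site fail type checking, which is the refactoring safety the enum would otherwise provide.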

2022.04.17

20:00, we considered launching a small shuttlecraft hovering the #1DA1F2 quadrant to monitor our clone's representation in this space. Apparently, the permit we acquired 2 years ago was revoked and rendered obsolete. We had to apply for a new license; however, it will take a while for the station to review our application. In the meantime, we launched a direct manual override of L's clone to reduce its noise ratio, which trimmed ~700 signal sources. We shall now resume course toward init.

16:30, we are discussing some ideas around coupling background/service code into the same module files, in a similar fashion to nextjs's getServerSideProps. The order of abstraction is huge, but it might provide a convenient way for developers to offload intensive work. Potentially we can provide several background process "containers" that they can simply import to get the feature for their extension. That said, we also want to make sure that the extension framework is "productive." Giving devs too many choices is a burden to their product.

04:00, we added another simple regex to generate an embed pointing to the log date header link for our Discord webhook announcer.

02:40, using regex101, we were able to replace our mdx image components with plain markdown for Discord rendering. We also added better styling to our large code blocks, which removes the need for a separate parent container.
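A minimal sketch of that replacement, assuming a hypothetical `<Image src="..." alt="..." />` component with `src` written before `alt`; the actual component name and attribute order in the log may differ:

```typescript
// Hypothetical MDX usage: <Image src="/log/image-1.png" alt="dev server" />
const MDX_IMAGE = /<Image\s+src="([^"]+)"\s+alt="([^"]*)"\s*\/>/g

// Rewrite each self-closing MDX image into plain markdown that Discord can render.
function mdxImagesToMarkdown(mdx: string): string {
  return mdx.replace(MDX_IMAGE, (_m: string, src: string, alt: string) => `![${alt}](${src})`)
}
```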

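00:54, implemented correct color conversion from HEX -> DECIMAL to convert our theme color's value to Discord's embed color. Code as follows:

```typescript
// Convert a "#RRGGBB" string into the integer Discord expects for embed colors.
// Bytes are read from blue (offset 5) back to red (offset 1), and each byte is
// weighted by 256^index, so blue ends up as the lowest byte.
const convertHexToDecimalColor = (hexColor: string) =>
  [5, 3, 1]
    .map((o) => hexColor.slice(o, o + 2))
    .map((c) => parseInt(c, 16))
    .reduce((acc, cur, index) => acc + cur * Math.pow(256, index), 0)

// In: #713ACA (Color.palette.purple in our theme)
const hexColor = "#713ACA"

// Out: 7420618
const decColor = convertHexToDecimalColor(hexColor)
```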

2022.04.16

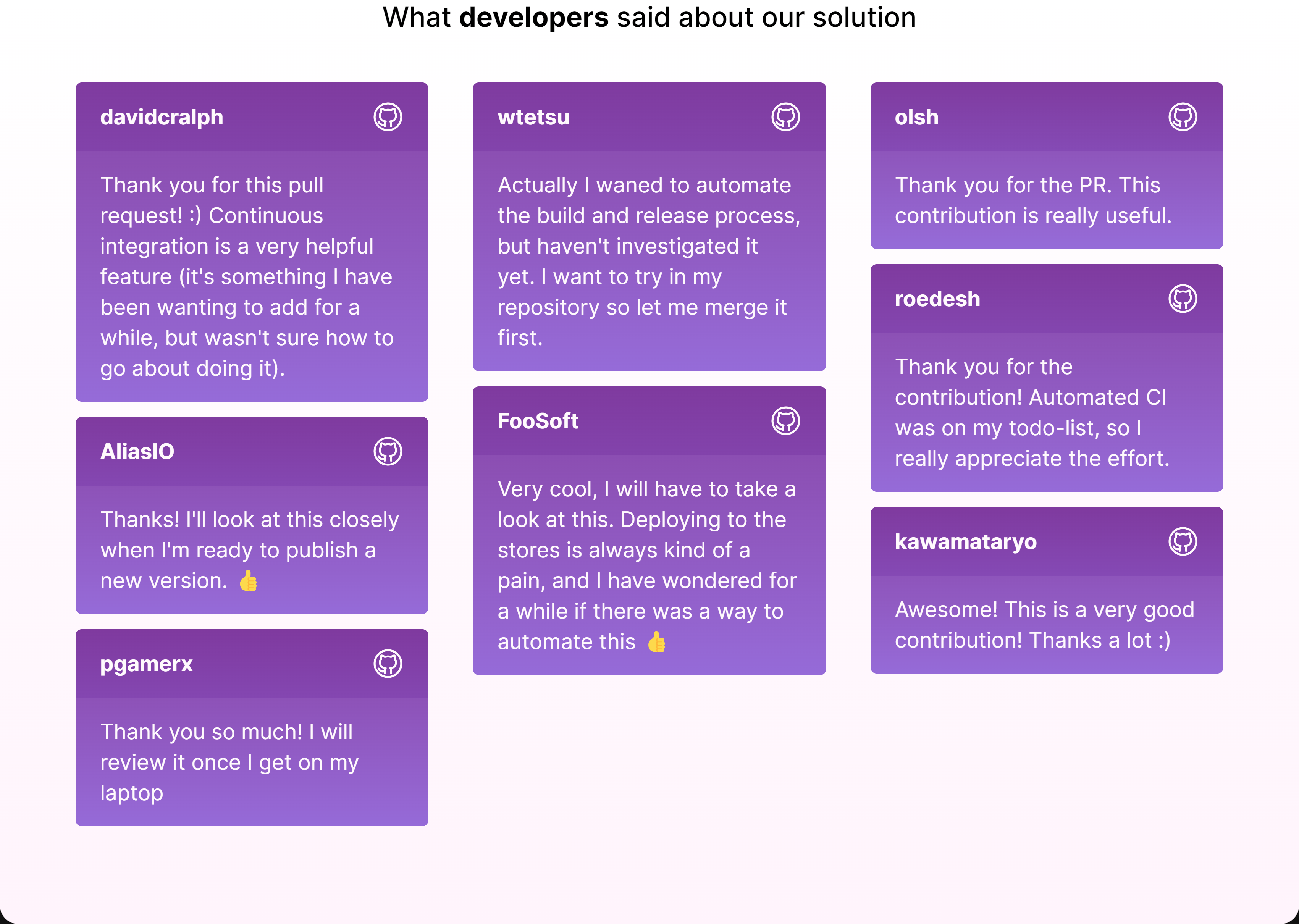

23:30, we are taking a small break to work on fronti. The main thing was adding the rest of the github comments related to bpp. It was fun to play around with media queries to ensure the column cards aligned well as we resize the screen. As the size of the card is dynamic relative to the width, and its content might change, the adjustment to make it actually look good was a bit more involved than we thought. We are happy with the final result!

21:44, wrapping up the basic init script. We are revisiting our old shuttle craft reporter for the scaffolding of its structure. There is much we can extract from this: the build setup, the dev server, and the file structure. We intend to abstract away most of this setup, so that new craft made from its prefab can be even more lightweight. For our current setup, we will be using parcel under the hood, with a base react UI. We are thinking about message passing between the service scripts and the frontends. We think it should be modern and forward-looking - likely a form of RPC, shared array buffer, or some form of native message passing.

21:00, we are thinking about where to host the scaffolding for our framework. Potentially, we can pack it with our cli, but that would be quite cumbersome for distribution. Expo stores its templates in an open repository, whereas nextjs stores 2 basic templates inside its create cli. It might be best to start out by bootstrapping a functional frame first, then abstract and package it into our framework.

19:00, resuming course on the framework. It seems some people in the quadrant expressed interest in background processes. However, such practice will be deprecated by mv3 policy from the galactic empire. We likely want to incorporate the latest APIs and recently maintained specs, rather than catering to arcane specs. We think that for our next warp jump, this framework must be a strong foundation, with sane defaults, that promotes the creation of new starships in the system.

18:10, we created a notification mechanism for our engineering-log (sending to a Discord webhook). It took a bit to make it efficient and not redundant. Since vercel does not provide a way to access the cached build state, we worked around this limitation by simply querying our deployed log in the production environment. With that, we can calculate the diff between the two versions and generate a notification appropriately. This ensures our notifications deliver only the latest log entries.
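A minimal sketch of the diff step, assuming each entry can be keyed by its date and time heading and that the production copy has already been fetched and parsed. The `LogEntry` shape and `newEntries` name are hypothetical:

```typescript
// A log entry keyed by its date and time heading, e.g. "2022.04.16 18:10".
interface LogEntry { id: string; body: string }

// Entries present in the freshly built log but not in the production copy,
// kept in local order; only these are sent to the webhook.
function newEntries(local: LogEntry[], production: LogEntry[]): LogEntry[] {
  const seen = new Set(production.map((e) => e.id))
  return local.filter((e) => !seen.has(e.id))
}
```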

02:20, we took a break to build a feed generator for this engineering log, available here: RSS, Atom, JSON.

2022.04.15

Update B.14

23:48, we are slowing down to reconvene our infrastructure for the next warp jump. After a deep dive into the firebase issue, we have managed to mitigate the problem by simply using the firebaseui library directly in our code base. Initializing the underlying FirebaseUI library inside a functional component was quite simple using useEffect. The only caution is to make sure only one instance of the ui is created, which can be ensured by storing the current instance in a ref.

21:20, rough day. The crew had no sleep, but we managed to incorporate much of our work into our warp jump. There were some hiccups on the road, and a huge bump at the end, but we managed to fly past them.

17:40, hailed @_DanielSinclair with some update on swu. We will be testing out this cargo ship in the next few days after we finalized our Apple dev account.

15:00, warp 9, we are recovering from a 4-hour disruption. We are leveraging our core provider to launch scale testing. If all goes according to plan, we will have results showing on itero soon.

05:00, scale driver pipe functional for itero to launch system. As we were investigating ways to make use of the driver's internal module for itero's channel propeller, we found that it was impossible to package playwright properly for the vercel api runner (which is based on AWS lambda containers). The packaged chromium binary for AWS has certain issues, and it also requires puppeteer to be a direct dependency. Our attempts to consume it from our api were futile.

03:00, the upgrade to react@18 rendered itero broken. The cause was an auth context de-sync. Somehow, it was unmounted on render, even after we dynamically loaded the components (without SSR). It is likely there is an issue with the way these components are being mounted that is not compatible with react@18. We issued a quick fix to revert react back to 17 for now as we approach warpspeed.

2022.04.14

22:00, all hands on deck for a warp 11 jump - we are focusing all our energy on itero. Our goal is to setup scale driver and security scanning. Some uncertainty regarding how we may consume our own library within our system. We shall test and see.

15:39, we are charting a course for the init sector. We would like the course to be as smooth as possible, freeing merchants from thinking about the details when traveling through this path. Being able to switch between the old and new engine might be a desired feature; however, with the current galactic empire's ruling and the recently announced policy, it might be better to ignore the old engine, since it will be outlawed by the end of the year.

06:00, as we were finalizing our refactor, we found that the linting process is duplicated for some of our modules (as the front hull and lower deck are connected). This might justify removing linting from the lower deck and delegating it to the more mission-critical front line. The tsc check for each module is also taking a considerable amount of time (>20s per linting run), which would no doubt hamper our productivity.

04:00, we are responding to some foss probes. It seems they are assessing whether our ship is benevolent or malicious. These probes are quite cautious as they approach us. I think it will take a while to convince them that we came in peace. Some probes classified our open cargo as "potentially dangerous." Let's hope that by being open with our intentions, they will be more open to us and our offer to help.

Copyright (c) 2022 Plasmo Corp. <foss@plasmo.com> (https://www.plasmo.com) and contributors

00:14, we have updated the copyright notice for some of our FOSS projects after reviewing the MIT license. There is indeed a copyright clause in this license, under which the original author's copyright notice must be included. This is something we overlooked when launching some of our foss hard forks, where we did not just copy code over from the original project, but incorporated bits and pieces of it into our own rewrite. Regardless, we ought to respect the original author's copyright. If you find us violating your license/copyright, please do not hesitate to open an issue or email us at foss at plasmo dot com.

2022.04.13

21:16, we are on our way to refactoring our codebase for react@18. While doing so, we also upgraded our linting tools. Our ship comprises 2 main modules: a lower engine packages deck, and an apps front hull. While running the linter on the lower deck, we noticed the setup could be a bit simpler by centralizing the eslint config. However, upon doing so, I realized that would prevent turborepo from caching the linting result for this compartment, which, as this deck scales further out, would be inefficient. I think we will reverse course and set up per-module config for this case. It will make configuration a bit more verbose, but it will allow our ship to scale.

18:00, as we were upgrading from react@17 -> react@18, I found out that react's FC type no longer includes children implicitly, pushing us toward self-declared generic props types. I am grateful that we caught this issue early on in our codebase, so fixing it should be quite trivial. However, it would be a headache to refactor a much larger codebase. The key takeaway from this, I think, is that if my codebase relies on an external dependency (be it by type or by value) in more than 5 of my own modules, it should be standard practice to encapsulate that package behind an interface that I control. Thus, when it comes to refactoring, I only have to do so at the top-level interface.
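A minimal sketch of that practice: application code depends on an interface we own, and a single adapter is the only place that touches the concrete package. A `Map` stands in for the wrapped dependency here; `KeyValueStore`, `createStore`, and `rememberTheme` are hypothetical names:

```typescript
// The interface we own; modules import this instead of the third-party package.
interface KeyValueStore {
  get(key: string): string | undefined
  set(key: string, value: string): void
}

// The only adapter that knows about the concrete dependency (a Map stands in
// for the external package being wrapped).
function createStore(backing: Map<string, string> = new Map()): KeyValueStore {
  return {
    get: (key) => backing.get(key),
    set: (key, value) => {
      backing.set(key, value)
    }
  }
}

// Application code depends only on KeyValueStore.
function rememberTheme(store: KeyValueStore, theme: string): string {
  store.set("theme", theme)
  return store.get("theme") ?? "default"
}
```

If the underlying package changes its API, only `createStore` needs to change; every module that uses `KeyValueStore` stays as-is.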

16:47, we are heading back to the central plasmo codebase to revise a new probe project named xstore. There was some logic that required untangling. We also discussed linting on pre-commit, which I think is a bit early. We already have a CI pipeline to do that linting for us, and I am not quite sure if the linter would catch TS-specific errors... If we were to set up such a pre-commit linter, we would want to add tsc for an additional typecheck.

14:16, we sent a PR to the chrome-webstore-upload repo with an updated token generation flow.

02:19, we added a simple linking mechanism for the engineering log, which should streamline referencing the log entries. As I was thinking about how to work with our log entries in the future, I felt that some readers might be confused by the order in which this log should be interpreted. We can certainly produce a chronologically ordered log by reversing the rendering order of the log entries, or write a how-to article on how to work with this log. For the time being, it should be safe to table this thought.

01:00, we released gcp-refresh-token, source code here.

pnpm dlx gcp-refresh-token

00:32, we are rebranding bpp from Browser Plugin Publisher to Browser Platform Publisher, as plug-ins is a term used to reference the obsolete method of adding features to the browser.

2022.04.12

23:44, we are almost ready to publish gcp-refresh-token. Testing this module turned out to be quite a challenge, as the refresh token flow requires user to navigate to the authentication page, sign-in with their credentials, before the code is then sent to a loopback server for the cli to use.

21:00, the esbuild bundle of gcp-refresh-token is quite large (~800kb). We will need to switch to tsup to exclude dependencies. One issue with tsup is that its abstraction does not work well when intermixing cjs and esm: the final cjs bundle cannot import an unbundled esm module. We will try a pure ESM module package.

15:23, we have located a good package name for the tool: gcp-refresh-token. We have spent the last couple of weeks researching prior art for this work, and have found inspiration in many repositories targeting google drive and google photos. We are now setting a straight course toward releasing gcp-refresh-token, and I doubt we will see any blockers on our way. We will be releasing the tool under the MIT license.

05:07, we have refactored the plasmo cli structure to be more flexible for future sub-commands. As we contemplate the future, we cannot help but think about turbo-repo and esbuild, two popular dev tools that are both written in go. We understand the power of go, its cross-platform capability, and its ecosystem. However, adding another language to the tech stack would be a setback that we are not sure we are willing to take, given how much still needs to be done. We are discussing the new chrome refresh token retrieval issue with extension developers. I think this is a high-priority issue, since there is a clear need for the tool we're developing to be available to devs now. We expect the flow to be quite similar to the Expo or Figma sign-in, with a loopback server running on a pre-defined port that will be used to retrieve the refresh token.
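The loopback flow can be sketched in two pure steps: build the authorization URL the CLI opens in the browser, then pull the `code` out of the request that hits the loopback server. The endpoint and the Chrome Web Store scope are Google's documented values; the client ID and port are placeholders, and the function names are hypothetical. The server itself (for example node's `http.createServer`) and the code-for-token exchange at `https://oauth2.googleapis.com/token` are omitted:

```typescript
const AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
const CWS_SCOPE = "https://www.googleapis.com/auth/chromewebstore"

// access_type=offline plus prompt=consent asks Google to issue a refresh token
// when the returned code is later exchanged.
function buildAuthUrl(clientId: string, port: number): string {
  const url = new URL(AUTH_ENDPOINT)
  url.searchParams.set("client_id", clientId)
  url.searchParams.set("redirect_uri", `http://127.0.0.1:${port}`)
  url.searchParams.set("response_type", "code")
  url.searchParams.set("scope", CWS_SCOPE)
  url.searchParams.set("access_type", "offline")
  url.searchParams.set("prompt", "consent")
  return url.toString()
}

// Parse the request path the loopback server receives, e.g. "/?code=4%2Fabc&scope=...".
// Returns null for unrelated requests such as "/favicon.ico".
function extractAuthCode(requestPath: string, port: number): string | null {
  const url = new URL(requestPath, `http://127.0.0.1:${port}`)
  return url.searchParams.get("code")
}
```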

00:25, the image fade-up component has been implemented. I believe we have had enough fun on this fronti shore-leave project, a necessary break from our more technically challenging work. We are setting course for a new project under the itero product line: a framework that streamlines everything about extension development. It would have constraints similar to Next.js, and it would give extension developers speeds they never thought possible.

2022.04.11

21:28, we applied the new theme to the fronti web app. Dark purple was quite prevalent in the old design, and I found it a bit hard to part ways with it completely. It is still used alongside the new normal and light purples.

20:05, we refined the side image component for the engineering log. It should now reduce the friction of adding new screenshots.

18:00, we have gathered ample feedback on the new color scheme from our friends. The new color scheme is indeed favored over the old one. We shall begin incorporating it into our theme.

13:00, we are reviewing @markusmoetz's latest design iteration for fronti. I appreciate the experimentation and refinement; however, I personally don't like the new shade of purple, as it looks a bit neutral to my eyes. I have transmitted a screenshot of the new design to some friends of mine, and will wait for their thoughts while I think more about how to optimize the site. One issue that requires urgent attention is the way our images are loaded into the page. We will need to optimize them further and adopt a better fade-in strategy for these assets.

2022.04.10

18:01, I'm contemplating moving the engineering log directory out of apps/fronti-web/public/engineering. The path is quite complex, and might be inconvenient for new engineers to work with... However, the screenshots directory would then have to be managed separately. This entails a separate script to move the screenshots to the directory where the MDX engine can find them...
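
If the log does move, that screenshot script could be as small as the sketch below. Both directory paths and the set of image extensions are assumptions, and `syncScreenshots` is a hypothetical name; it copies rather than moves, so the log's own folder stays the single source of truth.

```typescript
import { copyFileSync, existsSync, mkdirSync, readdirSync } from "node:fs";
import { extname, join } from "node:path";

// Extensions treated as screenshots (an assumption; extend as needed).
const IMAGE_EXTENSIONS = new Set([".png", ".jpg", ".jpeg", ".gif", ".webp"]);

/**
 * Copy every screenshot from `sourceDir` into `targetDir`, creating the
 * target if needed. Returns the names of the files that were copied.
 */
function syncScreenshots(sourceDir: string, targetDir: string): string[] {
  if (!existsSync(sourceDir)) return [];
  mkdirSync(targetDir, { recursive: true });
  const copied: string[] = [];
  for (const entry of readdirSync(sourceDir, { withFileTypes: true })) {
    if (!entry.isFile()) continue;
    if (!IMAGE_EXTENSIONS.has(extname(entry.name).toLowerCase())) continue;
    copyFileSync(join(sourceDir, entry.name), join(targetDir, entry.name));
    copied.push(entry.name);
  }
  return copied;
}

// Hypothetical layout: screenshots live next to the log and are copied
// into the public folder the MDX pages are served from.
// syncScreenshots("engineering/screenshots", "apps/fronti-web/public/engineering");
```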

15:00, the captain made a case for installing Grammarly; however, its VS Code support is not quite there yet. Hopefully we will see an official extension sometime this summer. Refer to: znck/grammarly

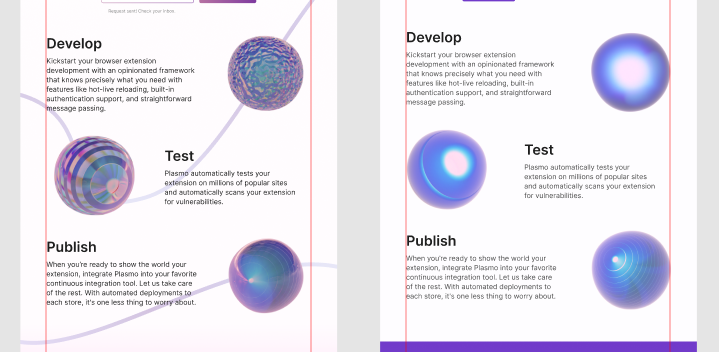

02:11, mobile responsive design is largely done for fronti. I have also gone ahead and replaced the Unicode emoji in the footer with SVG icons, and fixed some simple grammatical errors.

2022.04.09

17:30, we are en route to getting mobile styling ready for fronti. Upon further investigation, it might be worth having a reusable media query mechanism to reduce the work needed. The syntax I have in mind is a kind of fallback mechanism, which might be a bit tricky to accomplish. It might be beneficial to look at how the css function does its job in emotion and study its internals.
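
One possible shape for that fallback syntax, sketched as a plain function that returns an emotion-compatible style object: one value per breakpoint, where a `null` entry emits nothing and lets the previous value cascade. The breakpoint values and the `responsive` name are assumptions, not an emotion API; the facepaint library, which the emotion docs point to for media queries, offers a similar array syntax.

```typescript
// Mobile-first breakpoints in px (assumed values, smallest first).
const BREAKPOINTS = [640, 1024, 1440];

type CssValue = string | number;
// Nested style object, the shape emotion's css() also accepts.
interface CssObject {
  [key: string]: CssValue | CssObject;
}

/**
 * values[0] is the base style; values[i] applies from BREAKPOINTS[i - 1] up.
 * A null/undefined entry emits nothing, so the previous value keeps
 * cascading. Extra values beyond the last breakpoint are ignored.
 */
function responsive(
  property: string,
  values: ReadonlyArray<CssValue | null | undefined>,
): CssObject {
  const style: CssObject = {};
  values.forEach((value, i) => {
    if (value === null || value === undefined) return;
    if (i === 0) {
      style[property] = value;
    } else if (i <= BREAKPOINTS.length) {
      style[`@media (min-width: ${BREAKPOINTS[i - 1]}px)`] = { [property]: value };
    }
  });
  return style;
}

// Usage: 16px on phones, unchanged at 640px, 24px from 1024px up.
// css(responsive("fontSize", [16, null, 24]))
```

Leaning on the cascade instead of writing every breakpoint out means a style only has to state where it changes.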

14:44, new shortcut: ctrl + alt + space -> go to definition. The original ctrl + alt + space binding moves to ctrl + alt + shift + space.

14:38, we have integrated @markusmoetz's 3D asset into fronti for a preview run. It is quite grainy; hopefully we can refine the export quality as we progress. I have also added a new shortcut for my own use in VS Code: ctrl + 1 -> workbench.action.firstEditorInGroup. This will further reduce the friction of adding entries to this log.

Standup 13:00

Some more Safari Webstore Upload update from @_DanielSinclair:

fastlane's match command generates a huge number of files. We wonder if we can base64-encode a zip of this output and store it as a GitHub secret. However, it seems the built-in way for fastlane is to specify a private GitHub repo or a private storage bucket from which to fetch these keys. We will write a guide on how to set up the private GitHub repo for now, and leave an open channel for future contributions toward a fully encapsulated solution.
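
Before committing to the base64 route, the size is worth checking: GitHub documents a 48 KB limit per Actions secret, and base64 inflates the payload by roughly a third. A minimal sketch, treating the limit conservatively as 48,000 bytes; `encodeForSecret`, `decodeFromSecret` and the archive file name are hypothetical.

```typescript
// GitHub documents a 48 KB limit per secret; 48,000 bytes is the
// conservative reading of that figure.
const SECRET_LIMIT_BYTES = 48_000;

/**
 * Base64-encode an archive (e.g. a zip of the fastlane match output) so it
 * can be pasted into a GitHub secret, failing early if it will not fit.
 */
function encodeForSecret(archive: Buffer): string {
  const encoded = archive.toString("base64");
  // Base64 output is ASCII, so its length equals its size in bytes.
  if (encoded.length > SECRET_LIMIT_BYTES) {
    throw new Error(
      `Encoded archive is ${encoded.length} bytes, over the ${SECRET_LIMIT_BYTES}-byte secret limit`,
    );
  }
  return encoded;
}

/** The inverse, e.g. run in CI before handing the files to fastlane. */
function decodeFromSecret(secret: string): Buffer {
  return Buffer.from(secret, "base64");
}

// Usage (hypothetical file name; readFileSync comes from "node:fs"):
// const secret = encodeForSecret(readFileSync("match-output.zip"));
```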

05:12, we are wrapping up some refactoring of the landing page's layout system, and renaming a couple of utilities to make them more consistent.

2022.04.08

Update A.13

22:49, merging the two email forms turned out to require more thought than expected, since their UIs and use cases differ quite significantly. It seems the hook refactor is all we need; the UIs should stay separate.

18:00, we are in the process of applying the new branding to all of our assets. The engineering quarter will inherit the blitz blob logo to commemorate the experimental spirit our crew was founded on.

17:30, we added a dynamic favicon to fronti. We will need to test it on a light-themed platform.

16:30, captain's log updated with CRX_REQUIRED_PROOF_MISSING

15:00, we are now at the YC landing page critique session. It seems nobody is there. After 5 minutes, we will be able to merge with another session, so maybe we will try that option.

14:56, we created a background blend button component. The background blend style seems reusable as plain CSS, so in our next iteration we can refactor it into a utility CSS class for any component to consume.

14:40, we have refactored a couple of our fronti links into a centralized location. While refining the styling of the email form, we found that the hook does not work as expected: fetch does not throw an error when the response status is not 2xx.
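
That matches how fetch is specified: the promise only rejects on network failures, and an HTTP error status still resolves, with `response.ok` set to false. A minimal sketch of the check the hook needs; `HttpError`, `ensureOk`, `subscribe` and the `/api/subscribe` endpoint are hypothetical names for illustration.

```typescript
/** Error carrying the HTTP status of a failed response. */
class HttpError extends Error {
  readonly status: number;

  constructor(status: number, message: string) {
    super(message);
    this.name = "HttpError";
    this.status = status;
  }
}

/**
 * fetch() resolves even for 4xx/5xx responses, so turn any non-2xx
 * response (response.ok === false) into a thrown error.
 */
function ensureOk(response: Response): Response {
  if (!response.ok) {
    throw new HttpError(response.status, `Request failed with status ${response.status}`);
  }
  return response;
}

/** Hypothetical subscribe call used by the email form hook. */
async function subscribe(email: string, endpoint = "/api/subscribe"): Promise<void> {
  const response = await fetch(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ email }),
  });
  ensureOk(response); // now a 500 from the API lands in the hook's catch
}
```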

13:39, we will be showcasing fronti at the YC virtual showcase meetup. Our main goal is to get as much feedback and criticism as possible. As such, I am striving to add a bit of polish to our application; hopefully we can earn some respect among our peers.

13:32, I have decided to invert this log's order. This log mainly serves our crew in keeping track of our internal progress, with informing the public as a secondary goal. To serve the primary goal, it is crucial that the act of logging has as little friction as possible. With this new order, the hour timestamp, e.g. 13:36, will serve as a section marker, so that readers can easily keep track and follow along. I'm tempted to use a shorter form of the timestamp, e.g. 1336; however, readers might easily mistake that for a year.

Standup 13:00

We are close to launching Safari Webstore Upload. We are thinking more about how many parameters we can pass down to the library, and whether we should try to provide more features. @stefan and I think we should focus on the upload functionality; if this library is embraced by the open source community, its features will expand along with it.

02:16, integrated the hook into the old form just to get it functional.

00:59, we're approaching the completion of the email form hook. It seems our crew has been a bit reluctant to use TypeScript enums. I do recall an issue where an enum's type is not easily inferred when the enum is used as a dictionary. Perhaps this is the distinction: when we need to store simple state, an enum is perfectly fine. However, when constructing a dictionary whose keys could be reused to map out broader dictionaries, an enum might not be the right tool for the job.
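
A small sketch of that distinction, with hypothetical names and copy. One concrete pitfall with enums as dictionaries is that numeric enums also carry reverse mappings, so `Object.keys` returns both the names and the numbers. A `const` object with `as const` keeps its keys inferable, and a `Record` keyed by `keyof typeof` that object lets the compiler check that a derived dictionary covers every key.

```typescript
// Simple state: an enum is perfectly fine here.
enum SubmitState {
  Idle = "idle",
  Submitting = "submitting",
  Success = "success",
  Error = "error",
}

// A dictionary whose keys we want to reuse: a const object plus derived types.
const FORM_VARIANTS = {
  header: "Get early access",
  footer: "Subscribe to our newsletter",
} as const;

// "header" | "footer", inferred from the object itself.
type FormVariant = keyof typeof FORM_VARIANTS;

// A broader dictionary with the same keys; leaving one out is a compile error.
const VARIANT_STYLES: Record<FormVariant, { compact: boolean }> = {
  header: { compact: false },
  footer: { compact: true },
};

function formLabel(variant: FormVariant, state: SubmitState): string {
  return `${FORM_VARIANTS[variant]} (${state}, compact=${VARIANT_STYLES[variant].compact})`;
}
```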

As we draw closer to the completion of the email form hook, we will be merging the two email forms that we made for fronti, adding dynamic styling to ensure the merged form can be used in both locations.

2022.04.07

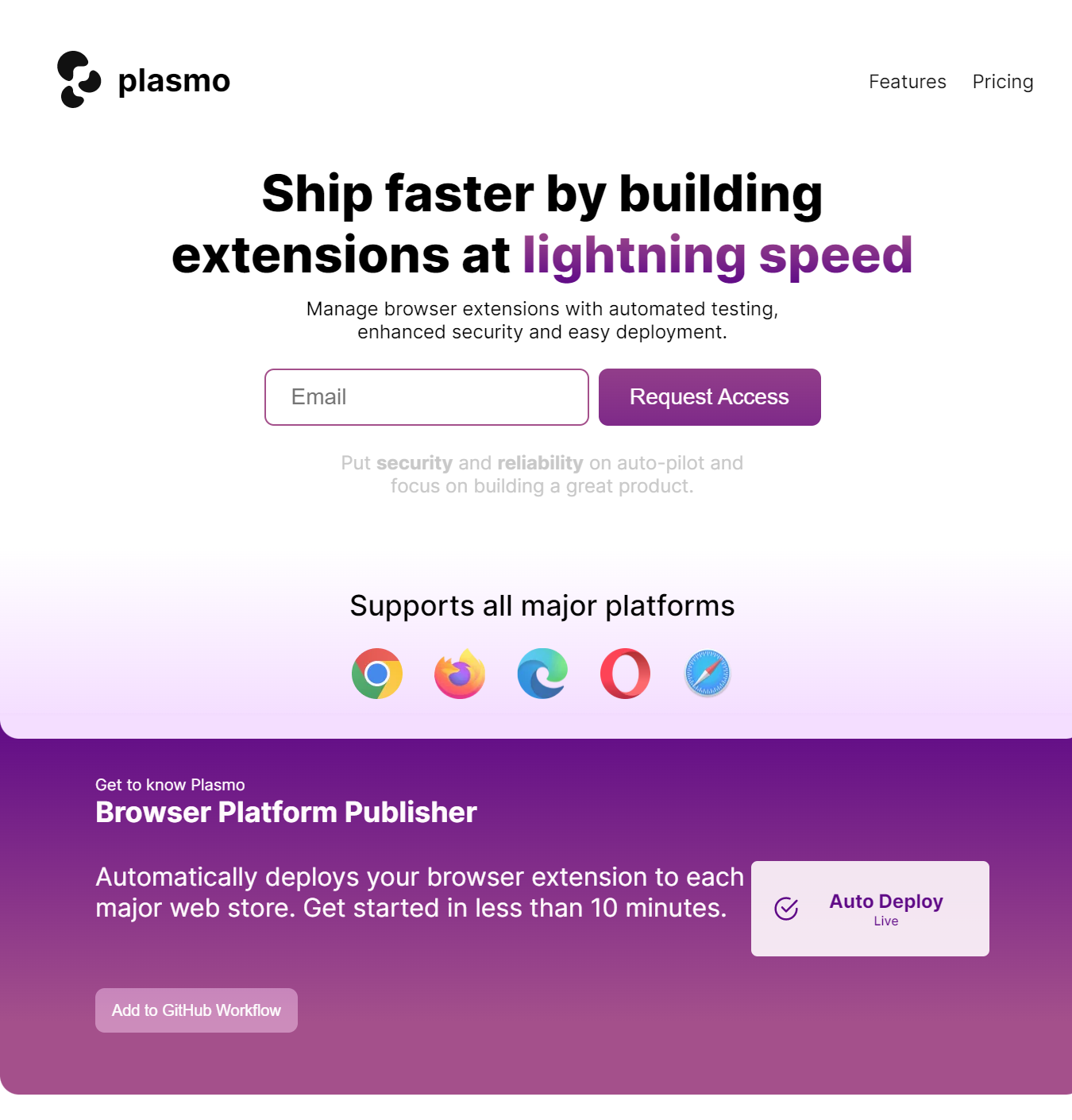

As of 13:02, we implemented the basic semantic layout for fronti, as well as the detailed design for 3 sections:

- HeaderSection

- HeroSection

- BppProductSection

Standup 13:11

More progress on Safari Webstore Upload: discussing options and environment variables, and how to pass them down to the library.

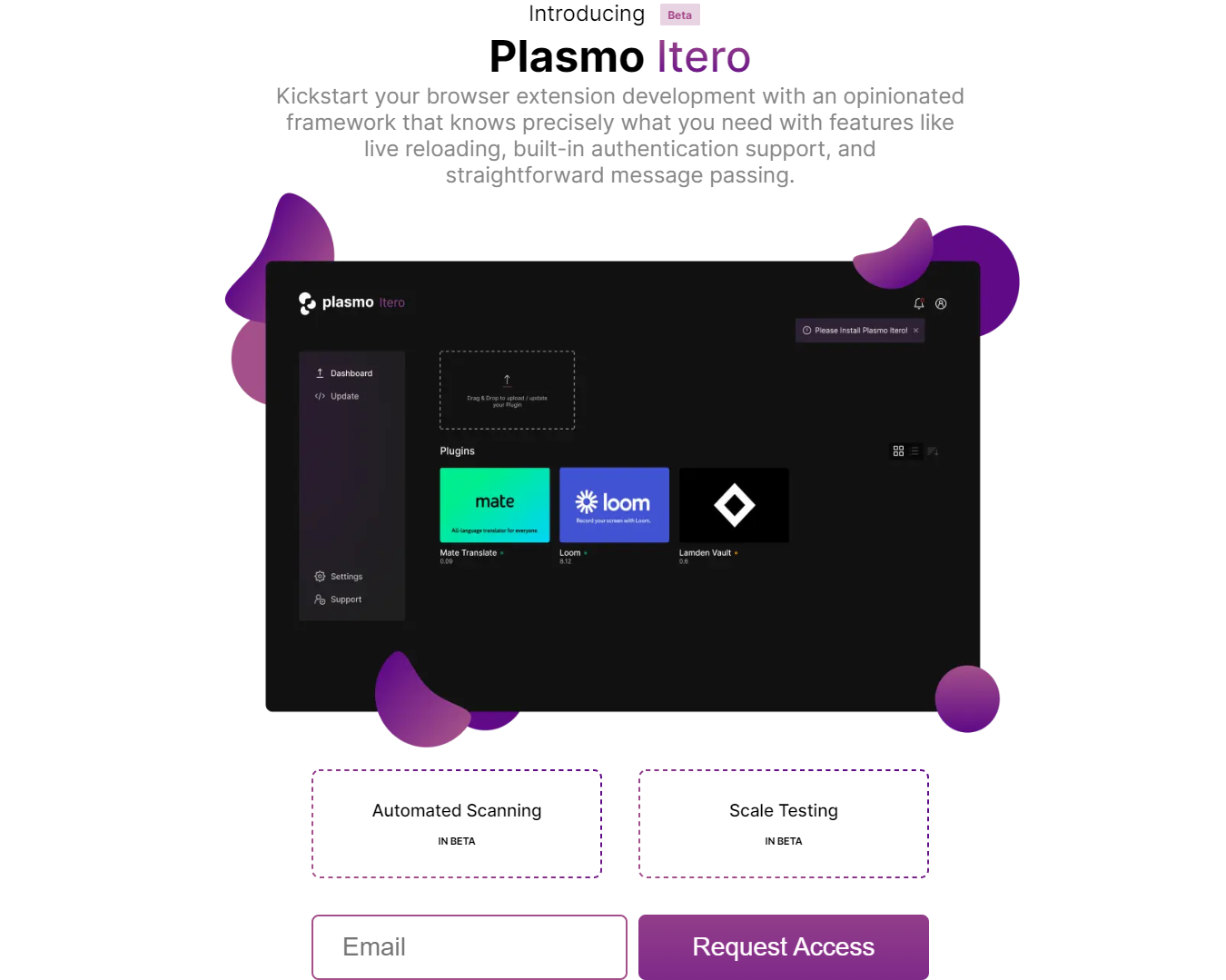

There's a screenshot section with a static PNG of our Itero web app. Since it's a PNG, scaling to extra-large screens is quite an issue. I'm not sure whether we want to keep it at a static width/height at minimum. An improvement, I think, would be to separate the 3 layers composing this section and add a parallax-like effect as the user scrolls through, so that they see some interesting depth of field.
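
A minimal sketch of the parallax idea, assuming the PNG is split into three hypothetical layers with made-up depth factors. Each layer is counter-translated by `scroll × (1 − depth)`, so its net on-screen movement is `scroll × depth`: the lower the depth, the farther away the layer appears.

```typescript
/** One layer of the screenshot section. */
interface Layer {
  name: string;
  // 0 = fixed on screen, 1 = scrolls normally; values in between lag behind.
  depth: number;
}

// Hypothetical split of the screenshot into three layers, back to front.
const LAYERS: Layer[] = [
  { name: "background", depth: 0.2 },
  { name: "app-window", depth: 0.5 },
  { name: "foreground", depth: 0.8 },
];

/**
 * translateY offset (px) per layer, given how far the page has scrolled
 * into the section. Each layer is pushed back down by scroll * (1 - depth),
 * so it moves on screen by scroll * depth in total.
 */
function parallaxOffsets(scrollIntoSection: number, layers: Layer[] = LAYERS): Record<string, number> {
  const offsets: Record<string, number> = {};
  for (const { name, depth } of layers) {
    offsets[name] = scrollIntoSection * (1 - depth);
  }
  return offsets;
}
```

The offsets would then be applied as `transform: translateY(…px)` from a scroll listener, ideally batched with `requestAnimationFrame` so the layers update once per frame.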

There is a bit of a defect with the coloring of the Add to GitHub Workflow action button. Perhaps @stefan can fix that.

Yellow alert 15:00

There is a 200ms delay before the CSS is actually applied on Fronti.

Hypothesis 1: this is an issue with emotion's SSR. This failed: the delay persisted even after we created our own emotion cache with a custom namespace.

Hypothesis 2: a non-SSR provider might have shadowed the emotion engine, preventing it from extracting critical CSS. This was also wrong: we tried reordering and removing some of the providers and testing them on deployments, without success.

Since we have more pressing matters to solve, we decided to simply add a fade-in animation for the initial load. This is a strategy learned from game development: when in peril, fake it, especially when it works.

The fade-in effect completely hides the CSS jank of the overall page from the viewer, and also gives our page an interesting welcome transition.

Cancel Yellow alert 16:00

As of 17:27, we implemented 2 more sections:

- IteroProductSection

- TrioSection

17:56, we found an issue with the plain dark favicon: it does not look good on a dark-themed browser tab bar. We are thinking of ways to mitigate this, either by dynamically swapping out the favicon or by adding some contrast to the dark one.
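
A sketch of the dynamic swap, assuming two hypothetical favicon files and that the `prefers-color-scheme` media query is a reasonable proxy for the tab bar theme (it reflects the OS or browser color scheme, which usually, but not always, matches the tab bar). `faviconFor` and `installFaviconSwitcher` are illustrative names.

```typescript
// Hypothetical asset paths: a dark icon for light tab bars and vice versa.
const FAVICONS = {
  light: "/favicon-dark.png", // used when the color scheme is light
  dark: "/favicon-light.png", // used when the color scheme is dark
} as const;

/** Pick the favicon that contrasts with the current color scheme. */
function faviconFor(prefersDark: boolean): string {
  return prefersDark ? FAVICONS.dark : FAVICONS.light;
}

/**
 * Browser-only wiring: set the icon now and whenever the color scheme
 * changes. Returns a cleanup function, e.g. for a React effect.
 */
function installFaviconSwitcher(doc: Document = document): () => void {
  const query = window.matchMedia("(prefers-color-scheme: dark)");
  let link = doc.querySelector<HTMLLinkElement>('link[rel="icon"]');
  if (!link) {
    link = doc.createElement("link");
    link.rel = "icon";
    doc.head.appendChild(link);
  }
  const icon = link; // const capture keeps the non-null type in the closure
  const apply = () => {
    icon.href = faviconFor(query.matches);
  };
  apply();
  query.addEventListener("change", apply);
  return () => query.removeEventListener("change", apply);
}
```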

18:01, we altered our subscribe api endpoint to use letterdrop instead of our old call to ghost. We tested it using the legacy reporter app.

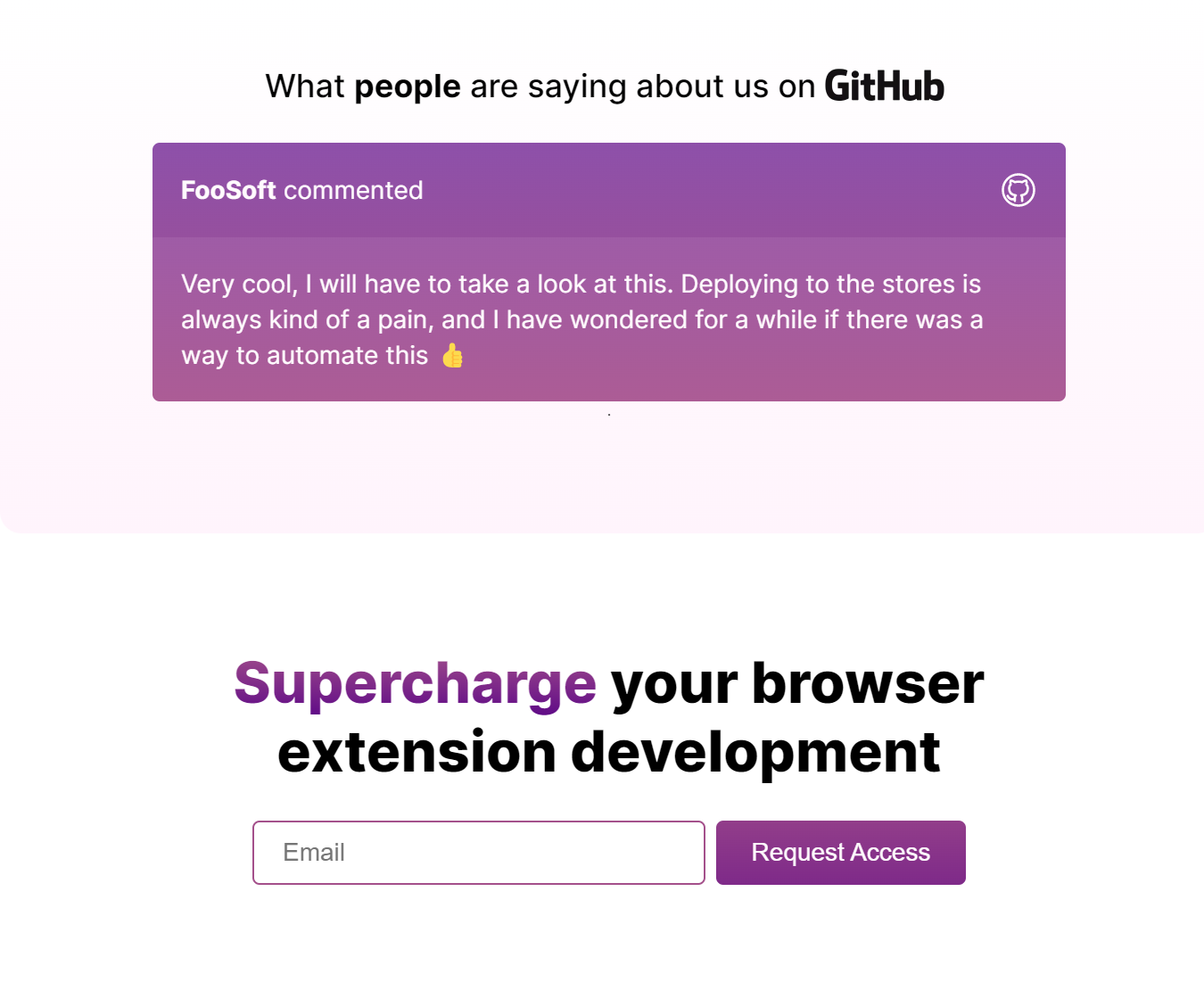

18:26, we implemented 2 more sections:

- GitHubCommentSection

- SuperchargeSection

20:59, the last 2 sections are done:

- PricingSection

- FooterSection

From this point, we can take a breather and focus on polishing up our site, fixing the rough edges, and adding some fancy animations. A couple of annoyances that we might want to tackle:

- The mix-blend-mode button component can be engineered into a one-size-fits-all solution. With this component, our buttons can take on the color of their parent without the effect bleeding into their text.

- The email API needs to be incorporated into every email form used throughout our site. This will be a light refactor.

Yellow alert 21:29

The SOC2 checklist is not functional; its state storage is completely broken in production.

The SOC2 checklist is potentially broken due to the switch-over to the node-object-hash module. We suspect localStorage cannot handle overly long keys, so too long a hash might have broken it. We should investigate the output of these hashes...

We fixed the issue by hashing the passed-down key. The key we were passing down was apparently an object, which stringified to the same string, [object Object], every time, resulting in key collisions.
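
The failure mode and the fix in miniature. This sketch hashes a key-order-stable serialization with Node's built-in `crypto` rather than node-object-hash, whose API is not reproduced here; `stableStringify`, `storageKey` and the 16-character digest length are illustrative choices. In the browser, `crypto.subtle.digest` would be the (async) equivalent of `createHash`.

```typescript
import { createHash } from "node:crypto";

// The bug in miniature: every plain object stringifies to the same key.
const a = { item: "mfa" };
const b = { item: "backups" };
console.log(`${a}` === `${b}`); // true: both are "[object Object]"

/**
 * JSON.stringify with object keys sorted, so key order does not change the
 * result. Assumes JSON-serializable input (no undefined, functions, cycles).
 */
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const entries = Object.keys(obj)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${stableStringify(obj[key])}`);
    return `{${entries.join(",")}}`;
  }
  return JSON.stringify(value);
}

/** Derive a short, fixed-length storage key from an arbitrary object. */
function storageKey(prefix: string, value: unknown): string {
  const digest = createHash("sha256").update(stableStringify(value)).digest("hex");
  return `${prefix}:${digest.slice(0, 16)}`;
}
```

Hashing also keeps every key the same short length, which sidesteps the long-key concern regardless of how large the source object grows.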

soc2 is back online.

Cancel Yellow alert 23:11

2022.04.06

We just signed off on the design made by @markusmoetz. With some components frozen, we're now en route to implementing them in our fronti-web project.

We will move the current page over to /legacy/lane, together with /legacy/saas, /legacy/reporter and /legacy/reporter-pricing.

The process we will use to implement the figma designs is as follows:

- Rough layout using semantic HTML, with all text content in place

- Identify recurring components, then refactor and reuse them

- Add high-fidelity design for each section, from easiest to most difficult

Sidenote 20:19

OSINT technique: get a list of websites that link to browser extensions.

2022.04.05

${plasmo:universe}/U64eCftcNBXxHZRu3